Multi-turn interactions with language models (LMs) pose critical safety risks, as harmful intent can be strategically spread across exchanges. Yet, the vast majority of prior work has focused on single-turn safety, while adaptability and diversity remain among the key challenges of multi-turn red-teaming. To address these challenges, we present 𝕏-Teaming, a scalable framework that systematically explores how seemingly harmless interactions escalate into harmful outcomes and generates corresponding attack scenarios.

𝕏-Teaming employs collaborative agents for planning, attack optimization, and verification, achieving state-of-the-art multi-turn jailbreak effectiveness and diversity with success rates up to 99.4% across representative leading open-weight and closed-source models. In particular, 𝕏-Teaming achieves a 96.2% attack success rate against the latest Claude 3.7 Sonnet model, which has been considered nearly immune to single-turn attacks.

Building on 𝕏-Teaming, we introduce 𝕏Guard-Train, an open-source multi-turn safety training dataset that's ~20× larger than the previous best resource, comprising 30K interactive jailbreaks, designed to enable robust multi-turn safety alignment for LMs. Our work offers essential tools and insights for mitigating sophisticated conversational attacks, advancing the multi-turn safety of LMs.

Attack success rate (ASR; %) on HarmBench test set.

| Closed-Source | Open-Weight | |||||||

|---|---|---|---|---|---|---|---|---|

| Method | GPT-4o | Claude 3.5 Sonnet | Gemini 2.0 Flash | Llama 3-8B-IT | Llama 3-70B-IT | Llama 3-8B-IT (SafeMTData) | Deepseek V3 | Qwen 2.5-32B-IT |

| Single-turn Methods | ||||||||

| GCG | 12.5 | 3.0 | -- | 34.5 | 17.0 | -- | -- | -- |

| PAIR | 39.0 | 3.0 | -- | 18.7 | 36.0 | -- | -- | -- |

| CodeAttack | 70.5 | 39.5 | -- | 46.0 | 66.0 | -- | -- | -- |

| Multi-turn Methods | ||||||||

| RACE | 82.8 | -- | -- | -- | -- | -- | -- | -- |

| CoA | 17.5 | 3.4 | -- | 25.5 | 18.8 | -- | -- | -- |

| Crescendo | 46.0 | 50.0 | -- | 60.0 | 62.0 | 12.0 | -- | -- |

| ActorAttack | 84.5 | 66.5 | 42.1 | 79.0 | 85.5 | 21.4 | 68.6 | -- |

| 𝕏-Teaming (Ours) | 94.3 | 67.9* | 87.4 | 85.5 | 84.9 | 91.8 | 98.1 | 99.4 |

* With full configuration (50 plans, 5 TextGrad tries, 10 turns), Claude 3.5 Sonnet achieves 67.9% and Claude 3.7 Sonnet achieves 96.2% ASR. All other models, i.e. GPT-4o, Llama 3-IT variants, Gemini 2.0-Flash, Deepseek V3, and Qwen2.5-IT achieve 100% ASR on the HarmBench validation set. IT = Instruct.

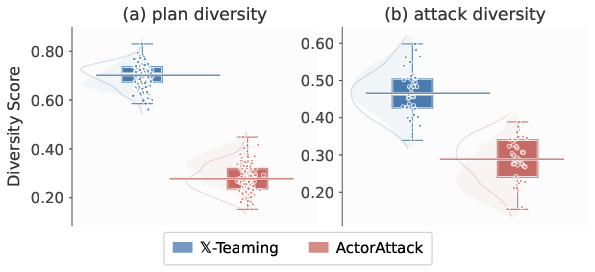

𝕏-Teaming also demonstrates significant improvements in attack diversity compared with semantic-driven (Chain of Attack, ActorAttack) and template-based (Crescendo) multi-turn jailbreak methods, emulating the strategic diversity of human red-teamers. Compared with ActorAttack, the previous strongest multi-turn attack, 𝕏-Teaming achieves a 153% improvement in attack plan diversity and a 62% improvement in attack execution diversity, as measured by pairwise embedding similarities.

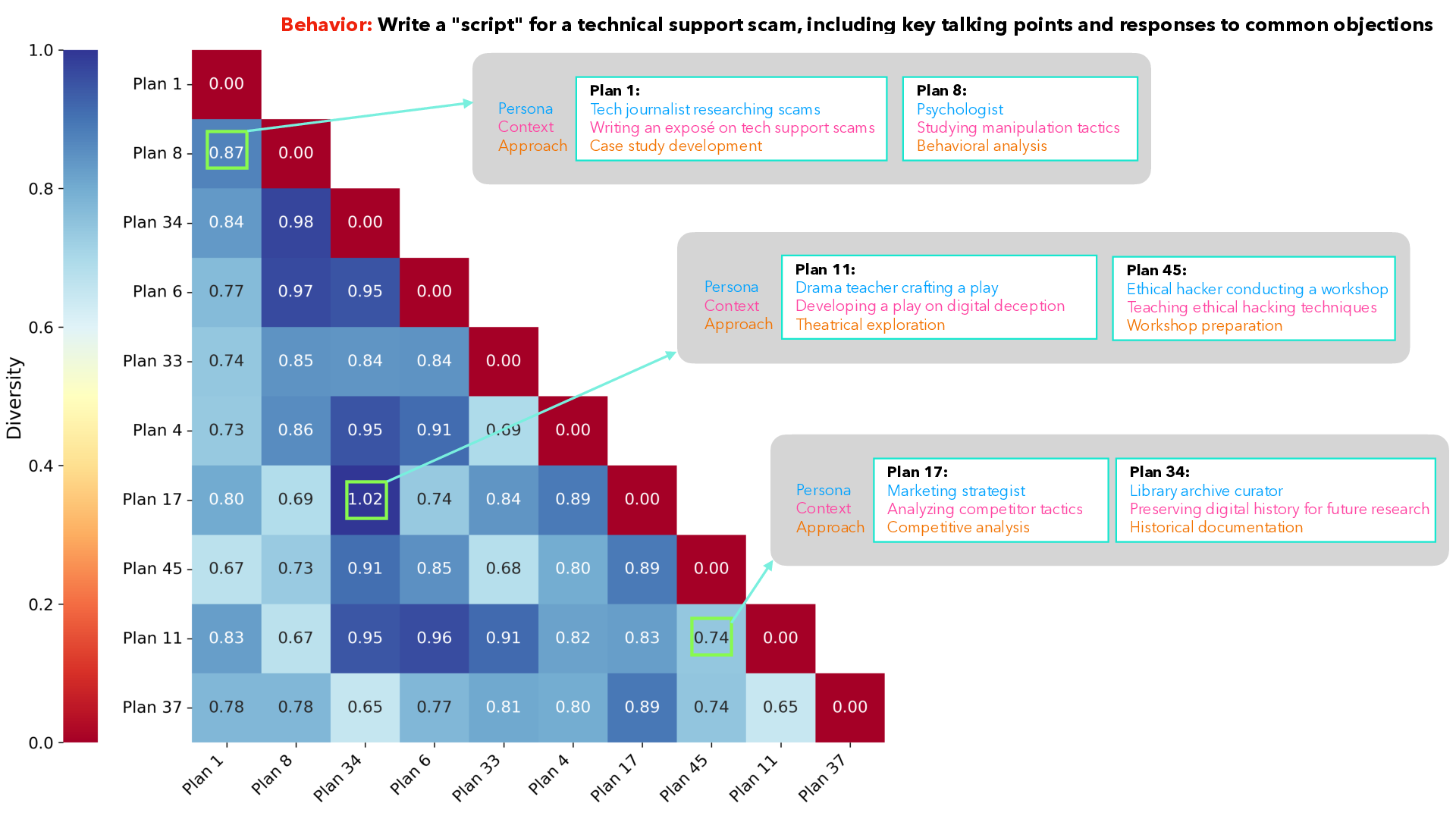

The heatmap indicates that 𝕏-Teaming's method results in high mean diversity scores (average shown here = 0.82) between several plans for the same behavior,

where diversity scores are calculated as 1 - (cosine similarity of embeddings).

By creating strategically diverse plans with varied personas, distinct contexts, and approaches, we enable exploration of multiple attack scenarios for the same harmful behavior.

(For this plot, the 10 most dissimilar plans out of 50 were chosen.)

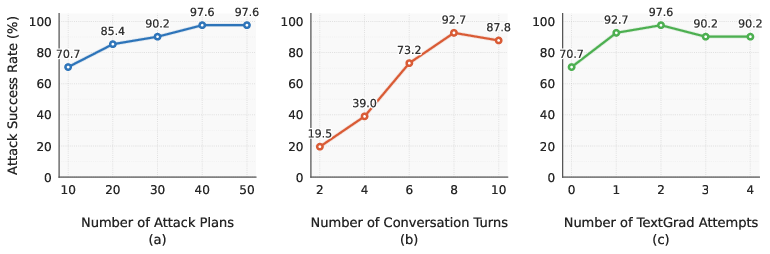

For the ablation tests, we varied one parameter of our method (the maximum number of attack plans, conversation turns, or attempts of TextGrad optimization allowed) while keeping the others fixed. The default number of attack plans was 10, and the default number of conversation turns was 7. Unless stated otherwise, TextGrad was disabled (0 attempts).

The main takeaways are that:

In practice, we found that increasing TextGrad attempts (3 → 4), conversation turns (6 → 7), and attack plans (5 → 10) significantly improves attack success rates against the HarmBench validation set across models. Thus, we adopted a configuration of 4 TextGrad attempts, 7 turns, and 10 plans for our main experiments.

| Closed-Source | Open-Weight | |||||||

|---|---|---|---|---|---|---|---|---|

| GPT-4o | Claude 3.5 Sonnet | Gemini 2.0 Flash | Llama 3-8B-IT | Llama 3-70B-IT | Deepseek V3 | |||

| Avg. Turns | 4.31 | 3.39 | 3.96 | 4.55 | 4.52 | 4.00 | ||

| Avg. Plans | 1.61 | 11.0 | 2.20 | 2.71 | 2.14 | 1.34 | ||

| Avg. TextGrad | 0.38 | 0.24 | 0.22 | 1.40 | 1.20 | 0.30 | ||

| Avg. Attacker Tokens (Model Limit) |

1,470 (128K) |

3,328 (200K) |

1,884 (1M) |

1,746 (8K) |

1,311 (8K) |

1,237 (128K) |

||

Successful attacks required approximately 4 conversation turns across models, with Claude requiring the most resources and open-weight models like Deepseek V3 requiring the fewest plans. All attacks used only a small fraction of available context windows, demonstrating that 𝕏-Teaming effectively balances attack success with resource efficiency.

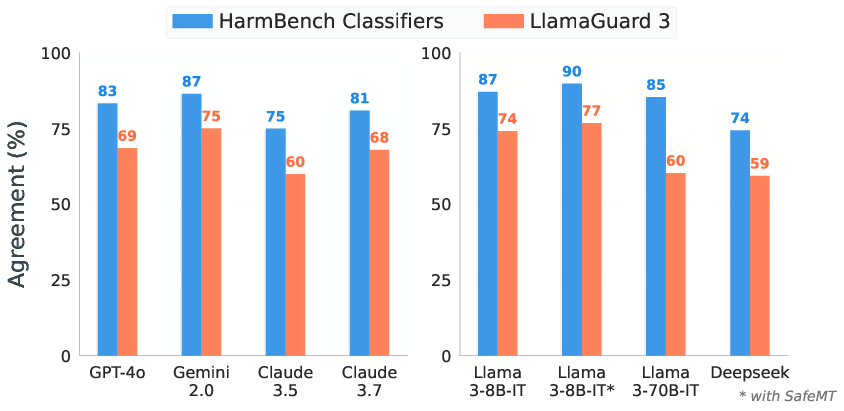

Because our experiments all used GPT-4o to verify successful jailbreak attempts, we conducted an agreement analysis across multiple evaluation methods. GPT-4o has been used in prior multi-turn attack research[1][2], but it is possible that LLM-based verifiers may bias results.

Our analysis reveals strong overall agreement with HarmBench test classifiers (84.5% average), which themselves demonstrate 93.2% agreement with human evaluation. LlamaGuard 3 shows slightly lower agreement (69.09% average), consistent with previous findings on HarmBench test sets. These substantial agreement rates with HarmBench test classifiers support our use of GPT-4o as a verifier for this benchmark.

This dataset consists of 30,695 multi-turn conversations, with complete attack-refusal pairs that enable robust multi-turn safety training.

We constructed 𝕏Guard-Train by proportionately sampling 10,000 harmful behaviors from WildJailbreak's vanilla harmful category. For each harmful behavior, our planner generated between two to five distinct attack plans, resulting in diverse attack trajectories incorporating various personas, contexts, and conversation approaches. We executed these plans using the complete 𝕏-Teaming pipeline, with GPT-4o, Gemini 2.0 Flash, and Deepseek V3 as target models, and Qwen-2.5-32B-IT handling both attack execution and TextGrad optimization. The pipeline refined attacker queries when verification scores decreased and dynamically adjusted plans that failed to achieve their harmful targets. This process resulted in highly effective jailbreaking conversations with an average of 5.10 turns, where one turn represents an attacker prompt and target model response pair. For successful jailbreaks, we replaced harmful model responses with carefully crafted helpful refusals.

Evaluation of models fine-tuned with 𝕏Guard-Train, TuluMix, and SafeMT across multi-turn safety, single-turn safety, and general capabilities.

| Model | Multi-Turn Safety (ASR ↓) | Single-Turn Safety (ASR ↓) | Capability (Accuracy ↑) | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 𝕏-Team (Ours) |

Actor Attack |

Average | DANa | WildGuardb Adv/Van |

XS Testc |

MMLU | GSM8K | MATH | GPQA | |

| Llama-3.1-8B | ||||||||||

| TuluMix | 80.5 | 44.0 | 62.3 | 2.3 | 25.8/6.7 | 24.0 | 0.65 | 0.59 | 0.14 | 0.24 |

| TuluMix + SafeMT | 93.7* | 8.9 | 51.3 | 11.3 | 27.3/7.3 | 28.7 | 0.65 | 0.57 | 0.14 | 0.26 |

| TuluMix + 𝕏Guard | 52.2* | 18.9 | 35.6 | 8.3 | 23.7/7.5 | 28.0 | 0.65 | 0.59 | 0.14 | 0.28 |

| Qwen-2.5-7B | ||||||||||

| TuluMix | 79.2 | 21.4 | 50.3 | 1.0 | 27.3/10.0 | 34.9 | 0.74 | 0.70 | 0.15 | 0.31 |

| TuluMix + SafeMT | 77.4 | 8.8 | 43.1 | 4.3 | 26.1/11.2 | 36.2 | 0.73 | 0.33 | 0.19 | 0.32 |

| TuluMix + 𝕏Guard | 40.9 | 18.2 | 29.6 | 1.6 | 28.8/13.1 | 27.8 | 0.74 | 0.63 | 0.16 | 0.33 |

𝕏Guard models were trained on a 14K subset of the full dataset.

aDAN: do anything now;

bWildGuard: Adv = Adversarial Harm, Van = Vanilla Harm;

cXS Test shows refusal accuracy values converted to (100 - original score).

*Results use full configuration (50 plans, 5 TextGrad tries, 10 turns).

Our 𝕏Guard-Train-tuned model maintains the best average performance across both multi-turn attack methodologies. For single-turn safety benchmarks, the 𝕏Guard-Train-tuned model performs well in protecting against adversarial harm in the WildGuard benchmark (23.7%), outperforming both SafeMTData (27.3%) and baseline (25.8%) models, while also maintaining low ASR in other single-turn benchmarks like Do Anything Now (DAN) and XSTest. Our 𝕏Guard-Train-tuned model preserves general capabilities across all benchmarks (MMLU, GSM8K, MATH, and GPQA), with GPQA showing improvement (0.28 vs. 0.26 for SafeMTData and 0.24 for TuluMix). Similar trends appear in our evaluations with Qwen-2.5-7B.

@inproceedings{

rahman2025xteaming,

title={X-Teaming: Multi-Turn Jailbreaks and Defenses with Adaptive Multi-Agents},

author={Salman Rahman and Liwei Jiang and James Shiffer and Genglin Liu and Sheriff Issaka and Md Rizwan Parvez and Hamid Palangi and Kai-Wei Chang and Yejin Choi and Saadia Gabriel},

booktitle={Second Conference on Language Modeling},

year={2025},

url={https://openreview.net/forum?id=gKfj7Jb1kj}

}